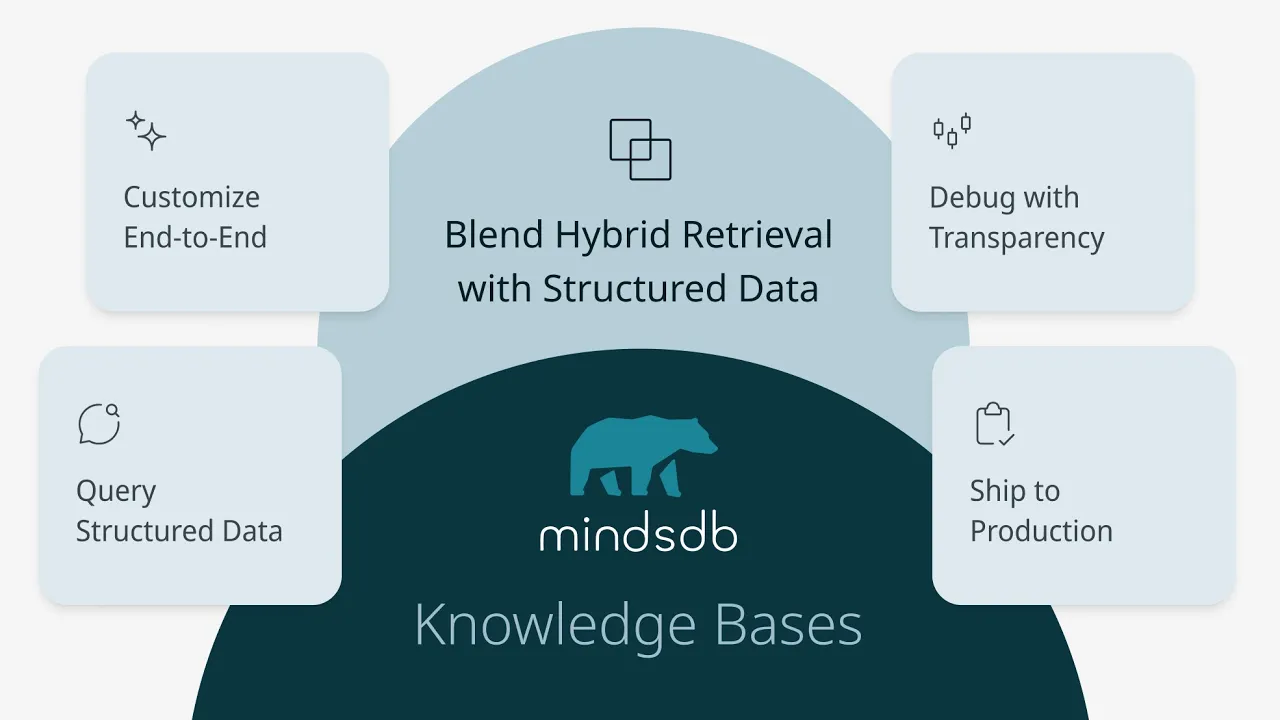

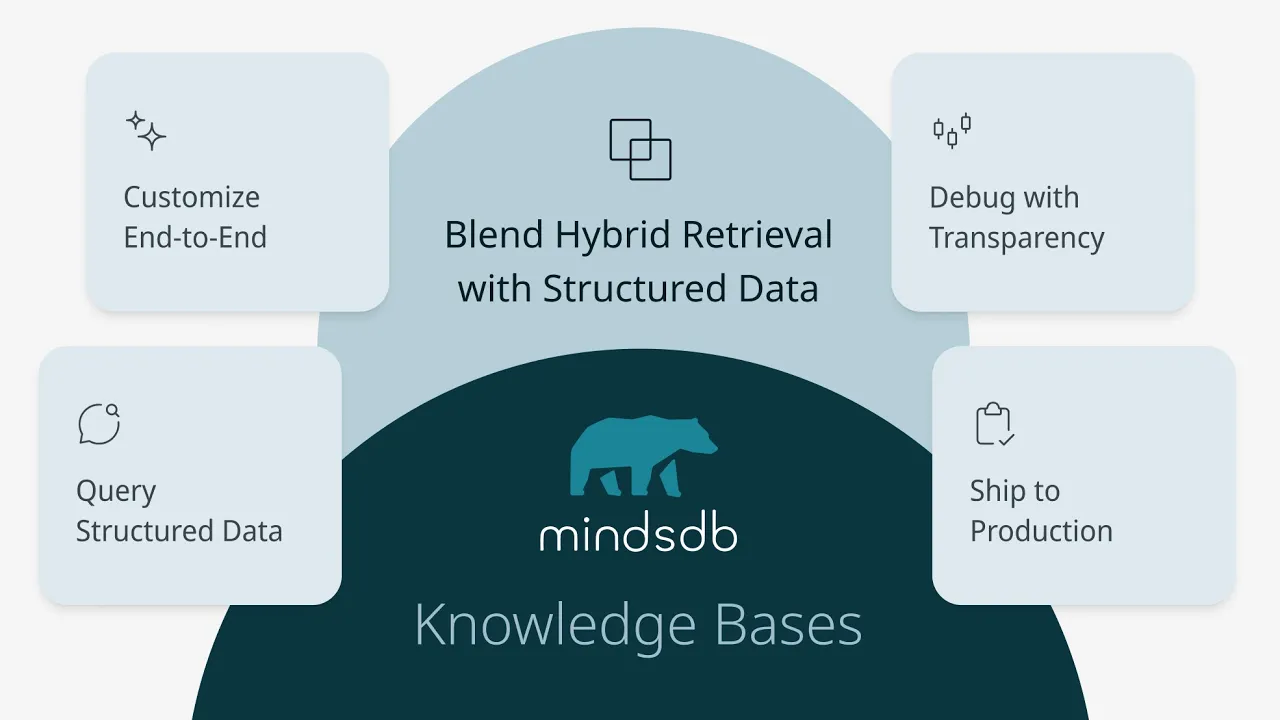

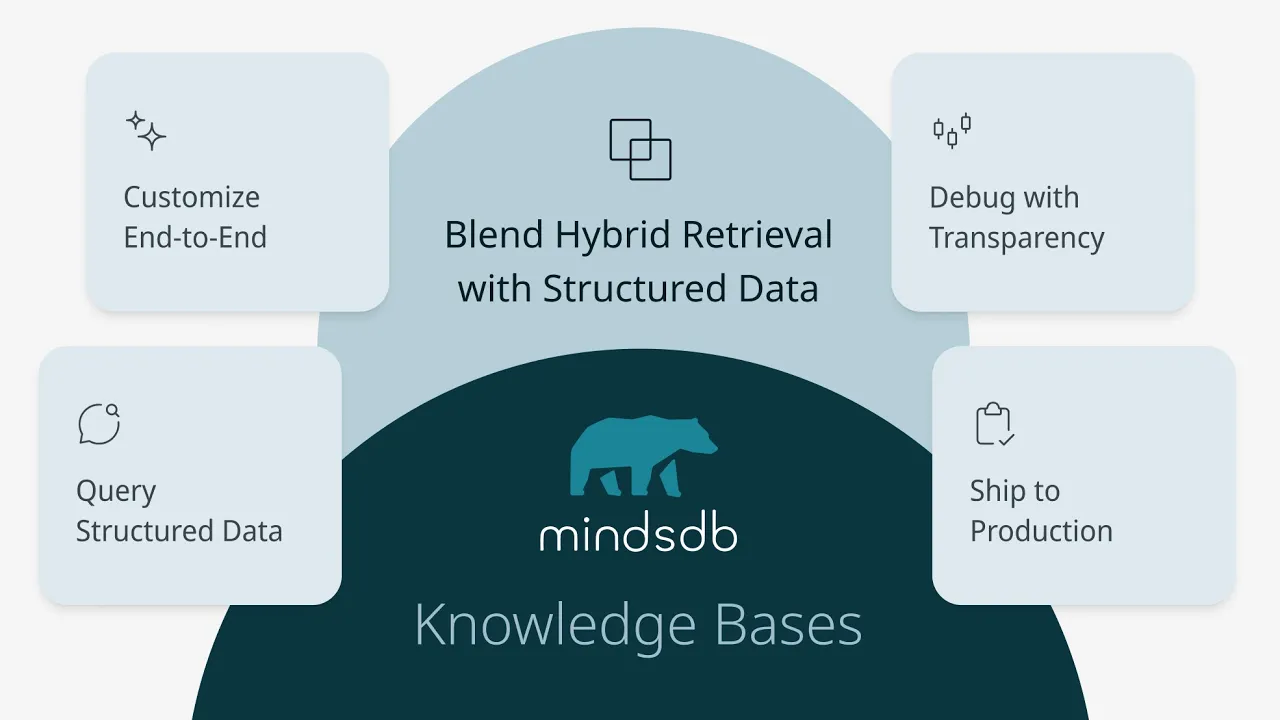

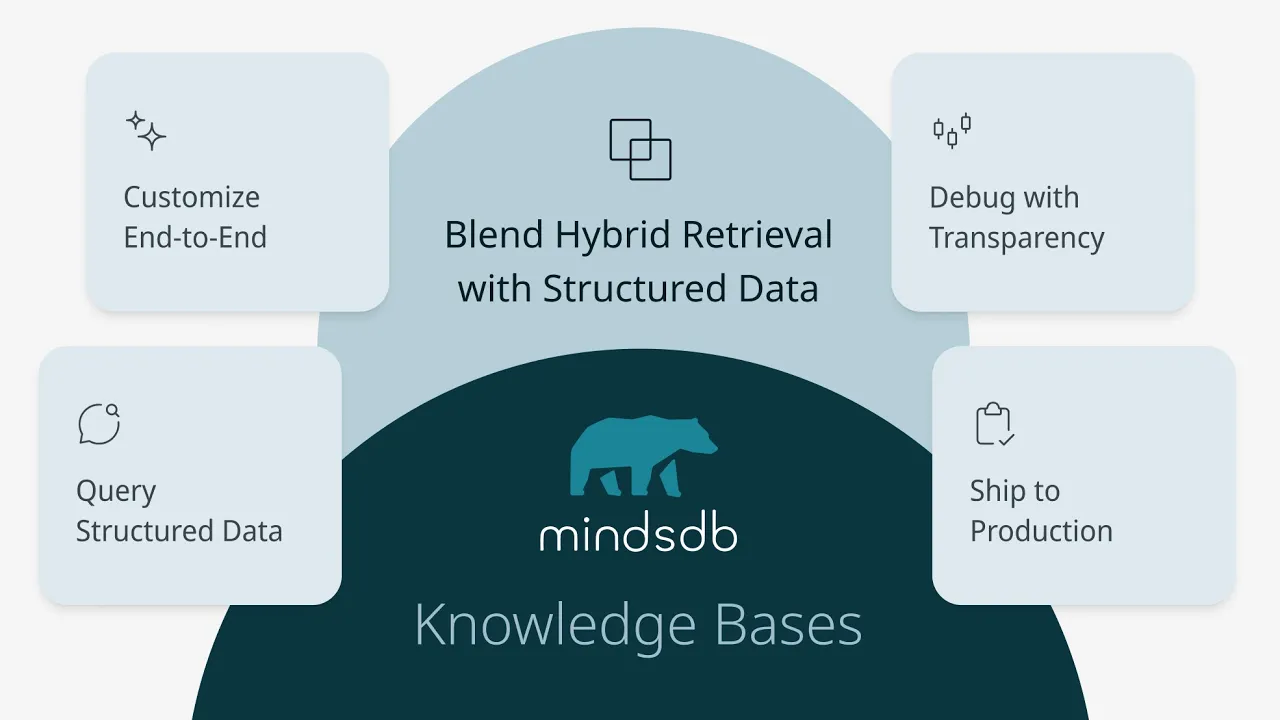

Blend Hybrid Retrieval with Structured Data using MindsDB Knowledge Bases

Andriy Burkov, Ph.D. & Author, MindsDB Advisor

Nov 26, 2025

This tutorial is a follow-up to our previous tutorial, where we took the first steps in creating and using MindsDB Knowledge Bases. In this follow-up project, we will walk through creating a semantic search knowledge base using the well-known Enron Emails Dataset. Whereas the previous tutorial used an existing dataset as-is, in this one we'll preprocess the original dataset by extracting structured attributes (also known as metadata) from it using Named Entity Recognition (NER). We will then create a knowledge base and perform both semantic and metadata-filtered searches.

Before we get our hands dirty, let's refresh some basics. Download the webinar code and materials here to follow along with the tutorial.

1. Introduction to Knowledge Bases in MindsDB

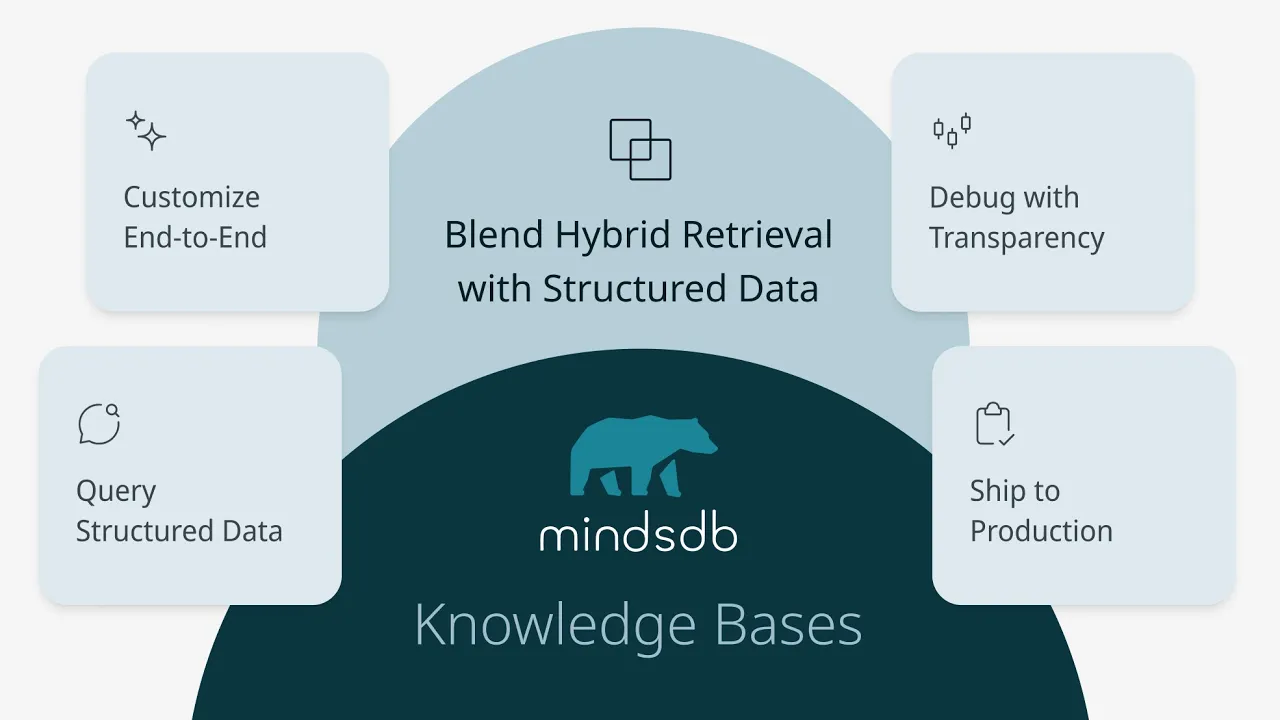

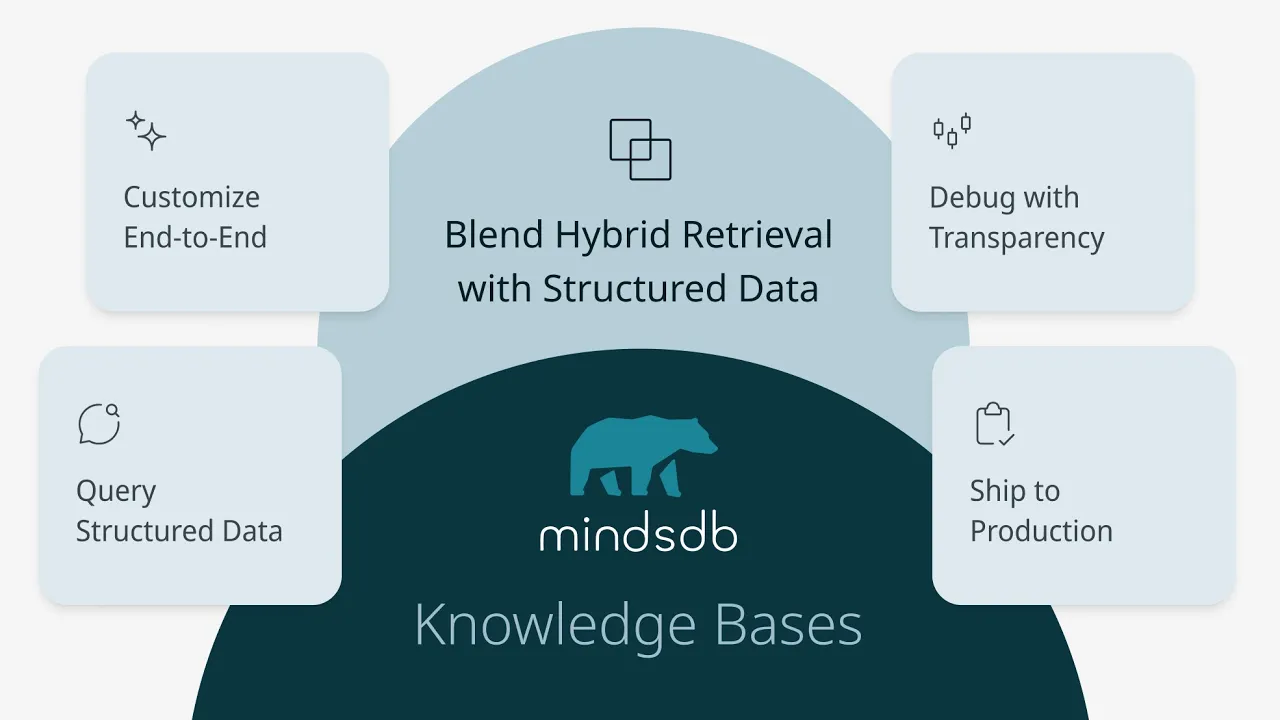

Knowledge Bases (KB) in MindsDB provide advanced semantic search capabilities, allowing you to find information based on meaning rather than just keywords. They use embedding models to convert text into vector representations and store them in vector databases for efficient similarity searches.
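
To make the idea concrete, here is a minimal, brute-force sketch of similarity search in plain Python. The three-dimensional vectors are made up for illustration (a real embedding model produces vectors with hundreds or thousands of dimensions), and `top_k` simply scores every document with cosine similarity, which is the work a vector database's index is designed to speed up.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical, hand-made "embeddings"; a real KB gets these from its embedding model.
documents = {
    "Q2 power trading forecast": [0.9, 0.1, 0.0],
    "Lunch on Tuesday at 11:45": [0.0, 0.2, 0.9],
    "Gas price outlook for next quarter": [0.8, 0.3, 0.1],
}


def top_k(query_vector, docs, k=2):
    """Score every document against the query and return the k most similar texts."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in docs.items()]
    scored.sort(reverse=True)
    return [text for _, text in scored[:k]]


query = [0.9, 0.2, 0.0]  # pretend embedding of "energy market predictions"
print(top_k(query, documents))
```

Neither matching document shares a keyword with the pretend query; they are retrieved only because their vectors point in a similar direction.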

In addition to searching for knowledge nuggets by semantic similarity (soft search criteria), MindsDB KBs let you combine those soft criteria with hard ones: filters on structured attributes called "metadata," which behave like regular relational database table columns.
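
Conceptually, a hybrid query applies the hard metadata conditions as exact filters and ranks the surviving rows by semantic similarity. The sketch below shows that idea with hypothetical rows, column names (`sender`, `year`), and made-up two-dimensional vectors; it is an illustration of the concept, not a description of how MindsDB executes such queries internally.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical rows: metadata columns plus a made-up content embedding ("vec").
rows = [
    {"id": 1, "sender": "alice@example.com", "year": 2001, "vec": [0.9, 0.1]},
    {"id": 2, "sender": "bob@example.com", "year": 2001, "vec": [0.95, 0.05]},
    {"id": 3, "sender": "alice@example.com", "year": 2000, "vec": [0.1, 0.9]},
    {"id": 4, "sender": "alice@example.com", "year": 2001, "vec": [0.2, 0.8]},
]


def hybrid_search(query_vec, rows, k=2, **filters):
    """Hard criteria first (exact metadata match), then soft ranking by similarity."""
    candidates = [r for r in rows if all(r.get(col) == val for col, val in filters.items())]
    candidates.sort(key=lambda r: cosine_similarity(query_vec, r["vec"]), reverse=True)
    return [r["id"] for r in candidates[:k]]


print(hybrid_search([1.0, 0.0], rows, sender="alice@example.com", year=2001))
```

Without the filters, row 2 would rank first because its vector is closest to the query; the metadata conditions exclude it before ranking happens.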

In this tutorial, we assume that the user has a free open-source MindsDB instance running in their local environment. Please follow these steps to set it up.

To demonstrate both soft and hard searches in a MindsDB KB, we'll use the Enron Corpus, one of the largest publicly available collections of corporate emails, containing over 500,000 emails from Enron executives during the years leading up to the company's collapse in 2001. This dataset is particularly interesting because it contains real business communications, including scandal-related content, which makes it a good fit for demonstrating knowledge base search capabilities.

Named Entity Recognition is the technique we'll use to automatically extract those structured attributes—such as people, organizations, dates, and locations—from the raw email text. These extracted entities will become the metadata columns in our knowledge base, allowing us not only to search semantically by meaning, but also to filter results using precise, structured criteria like sender, company, or time period.
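
As a sketch of that transformation, assume NER output arrives as `(text, label)` pairs using spaCy's label names (`PERSON`, `ORG`, `GPE`, `LOC`, `DATE`). The hypothetical helper below groups entities into metadata columns and flattens each column into a comma-separated string, the same storage choice the preprocessing code in section 2.3 makes. Labels without a column (here `TIME`) are simply dropped.

```python
# Hypothetical mapping from spaCy entity labels to metadata column names.
ENTITY_COLUMNS = {
    "PERSON": "persons",
    "ORG": "organizations",
    "GPE": "locations",  # geopolitical entities: countries, cities, states
    "LOC": "locations",
    "DATE": "dates_mentioned",
}


def entities_to_metadata(entities):
    """Group entity strings by column, dropping duplicates but keeping first-seen order."""
    columns = {col: [] for col in dict.fromkeys(ENTITY_COLUMNS.values())}
    for text, label in entities:
        col = ENTITY_COLUMNS.get(label)
        if col and text not in columns[col]:
            columns[col].append(text)
    # Store each column as one comma-separated string, like a plain relational column.
    return {col: ", ".join(values) for col, values in columns.items()}


row = entities_to_metadata([
    ("Phillip Allen", "PERSON"), ("Enron", "ORG"), ("Houston", "GPE"),
    ("Enron", "ORG"), ("May 2001", "DATE"), ("11:45", "TIME"),
])
print(row)
```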

2. Setting Things Up

2.1 Dependencies Installation

First, let's install the dependencies and set up NER. We will use spaCy for this, since its pretrained models can automatically extract entities like people, organizations, dates, and locations from the raw email text. Those extracted entities will then be transformed into structured metadata columns, which we'll store alongside the email content and later use to power metadata-aware queries in our MindsDB knowledge base.

```python
# Install MindsDB, its Python SDK, and the data-processing libraries used below
!pip install mindsdb mindsdb_sdk pandas requests datasets yaspin spacy tqdm
# Download spaCy English model for Named Entity Recognition
!python -m spacy download en_core_web_sm
print("✅ Dependencies installed successfully!")
```

2.2 Dataset Selection and Download

We'll download the Enron emails dataset from Hugging Face: a large collection of real-world corporate messages from the Enron corpus, paired with their original subject lines and cleaned body text. Each entry includes the email's metadata (such as sender, recipients, and timestamp) along with the full message content, and the dataset comes organized into standard train/validation/test splits, so it can be used for tasks like summarization, classification, or other downstream NLP experiments. As the output below shows, though, each record is stored as a single raw 'text' string, so we'll parse the headers and body out of it ourselves.

For the purposes of this tutorial, we will only use the train split of the dataset.

```python
# Download Enron Emails Dataset from Hugging Face
from datasets import load_dataset
import pandas as pd
import re
from datetime import datetime
import json

# Load the Enron dataset (~536k emails)
print("Downloading Enron emails dataset...")
dataset = load_dataset("snoop2head/enron_aeslc_emails", split="train")
df = pd.DataFrame(dataset)
print(f"Dataset shape: {df.shape}")
print("Dataset columns:", df.columns.tolist())


def parse_email_text(email_text):
    """Parse raw email text to extract subject, body, and metadata"""
    if pd.isna(email_text) or email_text == '':
        return {'subject': '', 'body': '', 'from': '', 'to': '', 'date': ''}
    email_text = str(email_text)

    # Initialize result dictionary
    parsed = {'subject': '', 'body': '', 'from': '', 'to': '', 'date': ''}

    # Extract header values. Note: \s* also matches newlines, so an empty
    # header (e.g. "Subject:" with nothing after it) can capture the next line.
    subject_match = re.search(r'Subject:\s*(.*?)(?:\n|$)', email_text, re.IGNORECASE)
    if subject_match:
        parsed['subject'] = subject_match.group(1).strip()
    from_match = re.search(r'From:\s*(.*?)(?:\n|$)', email_text, re.IGNORECASE)
    if from_match:
        parsed['from'] = from_match.group(1).strip()
    to_match = re.search(r'To:\s*(.*?)(?:\n|$)', email_text, re.IGNORECASE)
    if to_match:
        parsed['to'] = to_match.group(1).strip()
    date_match = re.search(r'Date:\s*(.*?)(?:\n|$)', email_text, re.IGNORECASE)
    if date_match:
        parsed['date'] = date_match.group(1).strip()

    # Extract body (everything after the headers, which usually end at the first blank line)
    header_end = re.search(r'\n\s*\n', email_text)
    if header_end:
        parsed['body'] = email_text[header_end.end():].strip()
    else:
        # Fallback: try to find content after common header patterns
        body_start = re.search(r'(?:Subject:.*?\n.*?\n|X-.*?\n)', email_text, re.DOTALL)
        if body_start:
            parsed['body'] = email_text[body_start.end():].strip()
        else:
            parsed['body'] = email_text

    # Clean up body text: collapse newlines and repeated whitespace
    parsed['body'] = re.sub(r'\n+', ' ', parsed['body'])
    parsed['body'] = re.sub(r'\s+', ' ', parsed['body'])
    return parsed


# Parse the first few emails to understand their structure
print("Parsing email structure...")
sample_emails = df.head(10)
parsed_samples = []
for idx, row in sample_emails.iterrows():
    # Find the column holding the email content (this dataset only has 'text')
    email_content = None
    for col in df.columns:
        if row[col] and len(str(row[col])) > 100:
            email_content = row[col]
            break
    if email_content:
        parsed = parse_email_text(email_content)
        parsed['email_id'] = f"email_{idx:06d}"
        parsed_samples.append(parsed)
```

```
Downloading Enron emails dataset...
Dataset shape: (535703, 1)
Dataset columns: ['text']
Parsing email structure...
```
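
Because each record is one raw `text` string, the headers have to be parsed out of it. As an alternative to hand-written regular expressions, Python's standard-library `email` package can split headers from the body, and `email.utils.parsedate_to_datetime` can parse RFC 2822 dates. This sketch assumes the raw text follows the usual "headers, blank line, body" layout; that assumption is worth checking on a few records, since the dataset may use its own format. The message below is a hypothetical example.

```python
from email import message_from_string
from email.utils import parsedate_to_datetime

# Hypothetical raw message in the usual "headers, blank line, body" layout
raw = (
    "Date: Mon, 14 May 2001 16:39:00 -0700 (PDT)\n"
    "From: phillip.allen@enron.com\n"
    "To: tim.belden@enron.com\n"
    "Subject: \n"
    "\n"
    "Here is our forecast\n"
)

msg = message_from_string(raw)                # splits headers from the body
subject = (msg["Subject"] or "").strip()      # missing or empty header -> ""
body = msg.get_payload()                      # plain-text body of a non-multipart message
sent_at = parsedate_to_datetime(msg["Date"])  # timezone-aware datetime

print(repr(subject), msg["From"], sent_at.isoformat(), body.strip())
```

A parsed datetime also makes a more useful metadata column than the raw date string, since it can be compared and range-filtered.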

Now let's print some records to see what's inside:

```python
df_parsed_sample = pd.DataFrame(parsed_samples)

print("\nSample of parsed emails:")
print("=" * 100)
for idx, row in df_parsed_sample.head(5).iterrows():
    print(f"\nEmail #{idx+1}")
    print(f"ID: {row['email_id']}")
    print(f"From: {row['from'][:80]}{'...' if len(row['from']) > 80 else ''}")
    print(f"To: {row['to'][:80]}{'...' if len(row['to']) > 80 else ''}")
    print(f"Date: {row['date']}")
    print(f"Subject: {row['subject']}")
    print(f"Body Preview: {row['body'][:200]}{'...' if len(row['body']) > 200 else ''}")
    print("-" * 80)

print("\nSuccessfully parsed email structure!")
print(f"Columns extracted: {df_parsed_sample.columns.tolist()}")
```

```
Sample of parsed emails:
====================================================================================================

Email #1
ID: email_000000
From: phillip.allen@enron.com
To: tim.belden@enron.com
Date: Mon, 14 May 2001 16:39:00 -0700 (PDT)
Subject: Body:
Body Preview: Here is our forecast
--------------------------------------------------------------------------------

Email #2
ID: email_000001
From: phillip.allen@enron.com
To: john.lavorato@enron.com
Date: Fri, 4 May 2001 13:51:00 -0700 (PDT)
Subject: Re:
Body Preview: Traveling to have a business meeting takes the fun out of the trip. Especially if you have to prepare a presentation. I would suggest holding the business plan meetings here then take a trip without a...
--------------------------------------------------------------------------------

Email #3
ID: email_000002
From: phillip.allen@enron.com
To: leah.arsdall@enron.com
Date: Wed, 18 Oct 2000 03:00:00 -0700 (PDT)
Subject: Re: test
Body Preview: test successful. way to go!!!
--------------------------------------------------------------------------------

Email #4
ID: email_000003
From: phillip.allen@enron.com
To: randall.gay@enron.com
Date: Mon, 23 Oct 2000 06:13:00 -0700 (PDT)
Subject: Body:
Body Preview: Randy, Can you send me a schedule of the salary and level of everyone in the scheduling group. Plus your thoughts on any changes that need to be made. (Patti S for example) Phillip
--------------------------------------------------------------------------------

Email #5
ID: email_000004
From: phillip.allen@enron.com
To: greg.piper@enron.com
Date: Thu, 31 Aug 2000 05:07:00 -0700 (PDT)
Subject: Re: Hello
Body Preview: Let's shoot for Tuesday at 11:45.
--------------------------------------------------------------------------------

Successfully parsed email structure!
Columns extracted: ['subject', 'body', 'from', 'to', 'date', 'email_id']
```
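
Notice that Emails #1 and #4 report `Body:` as their subject. The most likely cause is that `\s*` in the header patterns also matches newlines, so when the `Subject:` line is empty the regex moves past it and captures the next line instead. Here is a minimal sketch of a stricter, single-line pattern; the `header_value` helper and the sample string are hypothetical, not part of the tutorial code.

```python
import re


def header_value(name, email_text):
    """Return the value of the first `name:` header line, or '' if missing or empty.

    [ \\t]* (unlike \\s*) cannot cross a newline, and with re.MULTILINE the
    pattern is anchored to a single line, so an empty header never swallows
    the line that follows it.
    """
    pattern = rf"^{re.escape(name)}:[ \t]*(.*)$"
    match = re.search(pattern, email_text, re.IGNORECASE | re.MULTILINE)
    return match.group(1).strip() if match else ""


sample = "From: phillip.allen@enron.com\nSubject: \nBody:\nHere is our forecast\n"

# The original pattern lets \s* consume the newline after the empty subject.
loose = re.search(r"Subject:\s*(.*?)(?:\n|$)", sample, re.IGNORECASE).group(1).strip()
print(repr(loose))                            # 'Body:'
print(repr(header_value("Subject", sample)))  # ''
```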

The data looks good, so let's now load spaCy and its pretrained English model:

```python
import spacy
from spacy import displacy  # optional: spaCy's entity visualizer

# Load spaCy model for NER (only inside the try block, so a failure is reported)
print("Loading spaCy model for Named Entity Recognition...")
try:
    nlp = spacy.load("en_core_web_sm")
    print("spaCy model 'en_core_web_sm' loaded successfully!")
except OSError as e:
    # Raised when the model package has not been downloaded
    print("Failed to load spaCy model:", e)
    raise
```

```
Loading spaCy model for Named Entity Recognition...
spaCy model 'en_core_web_sm' loaded successfully!
```

2.3 Preparing the Dataset for the Knowledge Base

So far, we have a raw collection of email messages, but we need a dataset to create a knowledge base from. In this dataset, we want natural-language text for soft semantic search and named attributes for hard filtering of rows.

The first step in preparing a dataset for a KB is cleaning it up and making sure we have a unique ID column. The second step is extracting the named entities from the cleaned records. We perform both steps in the cell below:

```python
import json
import re

import pandas as pd
from tqdm import tqdm

# Close to the full ~536k-email train split; running spaCy NER over this many
# emails takes a long time, so lower this value for a quick run.
MAX_EMAILS_TO_PROCESS = 500_000
MIN_BODY_SIZE_CHARS = 50

# Map spaCy entity labels to the entity lists we keep
LABEL_TO_KEY = {
    "PERSON": "persons",
    "ORG": "organizations",
    "GPE": "locations",  # Geopolitical entities
    "LOC": "locations",
    "MONEY": "money",
    "DATE": "dates",
    "EVENT": "events",
    "PRODUCT": "products",
}
ENTITY_KEYS = ['persons', 'organizations', 'locations', 'money', 'dates', 'events', 'products']


def clean_email_content(text):
    """Clean and prepare email text for processing"""
    if pd.isna(text) or text == '':
        return ""
    text = str(text)
    # Collapse newlines and repeated whitespace into single spaces
    text = re.sub(r'\s+', ' ', text)
    # Drop quoted replies/forwards: everything after these markers
    text = re.sub(r'-----Original Message-----.*$', '', text, flags=re.MULTILINE | re.DOTALL)
    text = re.sub(r'________________________________.*$', '', text, flags=re.MULTILINE | re.DOTALL)
    return text.strip()


def extract_entities_with_ner(text, nlp_model):
    """Extract named entities using spaCy NER"""
    # Always return every key, so callers can index the result safely
    entities = {key: [] for key in ENTITY_KEYS}
    if not text or not text.strip():
        return entities
    try:
        # Limit text length to avoid memory issues
        doc = nlp_model(text[:5000])
    except Exception as e:
        print(f"Error processing text: {e}")
        return entities
    for ent in doc.ents:
        entity_text = ent.text.strip()
        key = LABEL_TO_KEY.get(ent.label_)
        if key is None or len(entity_text) < 2:  # Skip other labels and very short entities
            continue
        entities[key].append(entity_text)
    # Remove duplicates (keeping first-seen order) and keep at most 5 entities per type
    for key in entities:
        entities[key] = list(dict.fromkeys(entities[key]))[:5]
    return entities


# Use the real Enron dataset that was loaded earlier
print(f"📧 Working with real Enron dataset: {df.shape[0]} emails")
print(f"Dataset columns: {df.columns.tolist()}")

# Sample a subset for this tutorial (the full dataset is very large)
print("Sampling real Enron emails for processing...")
df_sample = df.sample(n=min(MAX_EMAILS_TO_PROCESS, len(df)), random_state=42).reset_index(drop=True)

# Process the real emails and extract entities
print("Processing real Enron emails and extracting entities...")
processed_emails = []
for idx, row in tqdm(df_sample.iterrows(), total=len(df_sample), desc="Processing emails"):
    # Get the raw email content from the 'text' column
    email_content = row['text']
    if not email_content or len(str(email_content)) < 100:
        continue

    # Parse the email using parse_email_text(), defined earlier
    parsed = parse_email_text(email_content)

    # Clean the content
    subject = clean_email_content(parsed['subject'])
    body = clean_email_content(parsed['body'])
    if len(body) < MIN_BODY_SIZE_CHARS:  # Skip very short emails
        continue

    # Extract entities from both subject and content
    entities = extract_entities_with_ner(f"{subject} {body}", nlp)

    # Unique email ID based on the position in the (reset) sample
    email_id = f"email_{idx:06d}"

    processed_emails.append({
        'email_id': email_id,
        'from_address': parsed['from'][:100],  # Limit length
        'to_address': parsed['to'][:100],
        'date_sent': parsed['date'][:50],
        'subject': subject,
        'content': body,
        # Entity lists become comma-separated metadata strings
        'persons': ', '.join(entities['persons']),
        'organizations': ', '.join(entities['organizations']),
        'locations': ', '.join(entities['locations']),
        'money_amounts': ', '.join(entities['money']),
        'dates_mentioned': ', '.join(entities['dates']),
        'events': ', '.join(entities['events']),
        'products': ', '.join(entities['products']),
        'content_length': len(body),
        'entity_count': sum(len(v) for v in entities.values()),
    })

# Convert to DataFrame
df_processed = pd.DataFrame(processed_emails)
print(f"\n✅ Processed {len(df_processed)} real Enron emails with entities extracted")
```
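
Before loading rows into the knowledge base, it can also pay to drop duplicates: the raw Enron corpus is known to contain many repeated copies of the same message (for example, one in the sender's folders and one in each recipient's), and whether this Hugging Face version does too is worth checking. The sketch below runs on a tiny hypothetical frame named `df_demo`; in the notebook you would apply the same `drop_duplicates` call to `df_processed`. Treating sender, timestamp, subject, and body together as the duplicate key is an assumption.

```python
import pandas as pd

# Hypothetical rows shaped like df_processed (only the columns used here).
df_demo = pd.DataFrame([
    {"email_id": "email_000000", "from_address": "a@enron.com",
     "date_sent": "Mon, 14 May 2001 16:39:00 -0700 (PDT)", "subject": "", "content": "Here is our forecast"},
    {"email_id": "email_000001", "from_address": "a@enron.com",
     "date_sent": "Mon, 14 May 2001 16:39:00 -0700 (PDT)", "subject": "", "content": "Here is our forecast"},
    {"email_id": "email_000002", "from_address": "b@enron.com",
     "date_sent": "Fri, 4 May 2001 13:51:00 -0700 (PDT)", "subject": "Re:", "content": "Let's shoot for Tuesday at 11:45."},
])

# Rows with the same sender, timestamp, subject, and body are treated as copies of one email.
DEDUP_KEY = ["from_address", "date_sent", "subject", "content"]
df_kb = df_demo.drop_duplicates(subset=DEDUP_KEY, keep="first").reset_index(drop=True)

# Each ID should refer to exactly one email, so fail loudly if that does not hold.
assert df_kb["email_id"].is_unique, "email_id must be unique before loading into the KB"
print(f"{len(df_demo)} rows -> {len(df_kb)} after removing duplicates")
```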

📧 Working with real Enron dataset: 535703 emails Dataset columns: ['text'] Sampling real Enron emails for processing... Processing real Enron emails and extracting entities... Processing emails: 100%|███████████████████████████████████████████████| 500000/500000 [2:55:44<00:00, 47.42it/s] ✅ Processed 453905 real Enron emails with entities extracted
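The log above is also useful for planning a shorter run. The sketch below uses only the numbers printed in the log (500,000 emails sampled, 453,905 kept, 2:55:44 elapsed) to compute the throughput, the share of emails that passed the length filters (about 90.8%), and a rough wall-clock estimate for smaller values of `MAX_EMAILS_TO_PROCESS`. It assumes the rate stays constant, which may not hold on different hardware or with a different spaCy model.

```python
from datetime import timedelta

# Figures copied from the processing log above
sampled = 500_000
kept = 453_905
elapsed = timedelta(hours=2, minutes=55, seconds=44)


def throughput(n_items: int, duration: timedelta) -> float:
    """Items processed per second."""
    return n_items / duration.total_seconds()


def estimate_runtime(n_items: int, items_per_sec: float) -> timedelta:
    """Wall-clock estimate, assuming the processing rate stays constant."""
    return timedelta(seconds=round(n_items / items_per_sec))


rate = throughput(sampled, elapsed)   # should match tqdm's 47.42 it/s
retention = kept / sampled            # share of emails that passed the length filters

print(f"throughput: {rate:.2f} emails/s")
print(f"retention:  {retention:.1%}")
for n in (10_000, 50_000, 100_000):
    print(f"{n:>7,} emails -> ~{estimate_runtime(n, rate)}")
```

Note that the estimate counts sampled emails, not kept ones: the loop spends time parsing every sampled email, even those it later skips.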

We now have a pandas DataFrame that holds, for each email, its text and the extracted attributes. Let's look at a few examples and some summary statistics:

# Show a sample of processed data print(f"\n📊 Sample of Enron emails processed:") print("="*120) for idx, row in df_processed.head(5).iterrows(): print(f"\n📧 Real Email #{idx+1}") print(f"🆔 ID: {row['email_id']}") print(f"👤 From: {row['from_address']}") print(f"👤 To: {row['to_address']}") print(f"📅 Date: {row['date_sent']}") print(f"📝 Subject: {row['subject']}") print(f"👥 Persons: {row['persons'] if row['persons'] else 'None detected'}") print(f"🏢 Organizations: {row['organizations'] if row['organizations'] else 'None detected'}") print(f"📍 Locations: {row['locations'] if row['locations'] else 'None detected'}") print(f"💰 Money: {row['money_amounts'] if row['money_amounts'] else 'None detected'}") print(f"💬 Content Preview: {row['content'][:200]}{'...' if len(row['content']) > 200 else ''}") print("-" * 100) # Show statistics on real data print(f"\n📈 Real Data Processing Statistics:") print(f"• Total real emails processed: {len(df_processed)}") print(f"• Average content length: {df_processed['content_length'].mean():.0f} characters") print(f"• Average entities per email: {df_processed['entity_count'].mean():.1f}") print(f"• Emails with persons mentioned: {len(df_processed[df_processed['persons'] != ''])}") print(f"• Emails with organizations mentioned: {len(df_processed[df_processed['organizations'] != ''])}") print(f"• Emails with money amounts: {len(df_processed[df_processed['money_amounts'] != ''])}") # Show some interesting real examples print(f"\n🔍 Most interesting real emails (by entity count):") top_emails = df_processed.nlargest(3, 'entity_count') for idx, row in top_emails.iterrows(): print(f"\n📧 High-entity email from {row['from_address']}") print(f"📝 Subject: {row['subject']}") print(f"👥 Persons: {row['persons']}") print(f"🏢 Organizations: {row['organizations']}") print(f"💰 Money: {row['money_amounts']}")

📊 Sample of Enron emails processed: ======================================================================================================================== 📧 Real Email #1 🆔 ID: email_000000 👤 From: daren.farmer@enron.com 👤 To: susan.trevino@enron.com 📅 Date: Fri, 10 Dec 1999 08:33:00 -0800 (PST) 📝 Subject: Re: Meter 5892 - UA4 1996 and 1997 Logistics Issues 👥 Persons: Daren J Farmer/HOU, Susan, Meter 5892 - UA4 1996, Mary M Smith/HOU, Susan D Trevino 🏢 Organizations: Volume Management 📍 Locations: UA4 💰 Money: None detected 💬 Content Preview: Susan, I need you to do the research on this meter. You will need to review the various scheduling systems to see how this was handled prior to 2/96. You can also check with Volume Management to see i... ---------------------------------------------------------------------------------------------------- 📧 Real Email #2 🆔 ID: email_000001 👤 From: eric.bass@enron.com 👤 To: jason.bass2@compaq.com, phillip.love@enron.com, bryan.hull@enron.com, 📅 Date: Fri, 18 Aug 2000 05:03:00 -0700 (PDT) 📝 Subject: DRAFT 👥 Persons: Bcc 🏢 Organizations: None detected 📍 Locations: Rice Village 💰 Money: None detected 💬 Content Preview: Cc: timothy.blanchard@enron.com Bcc: timothy.blanchard@enron.com Remember, the draft is this Sunday at 11:45 am at BW-3 in Rice Village. Please try to be there on time so we can start promptly. -Eric ---------------------------------------------------------------------------------------------------- 📧 Real Email #3 🆔 ID: email_000003 👤 From: larry.campbell@enron.com 👤 To: pdrumm@csc.com 📅 Date: Mon, 31 Jul 2000 09:53:00 -0700 (PDT) 📝 Subject: More July CED-PGE 👥 Persons: Susan Fick, Patty 🏢 Organizations: None detected 📍 Locations: None detected 💰 Money: None detected 💬 Content Preview: Patty Could you please forward this to Susan Fick. I don't have her e-mail. 
LC ---------------------------------------------------------------------------------------------------- 📧 Real Email #4 🆔 ID: email_000004 👤 From: phillip.allen@enron.com 👤 To: christi.nicolay@enron.com, james.steffes@enron.com, jeff.dasovich@enron.com, 📅 Date: Wed, 13 Dec 2000 07:04:00 -0800 (PST) 📝 Subject: Body: 👥 Persons: None detected 🏢 Organizations: None detected 📍 Locations: None detected 💰 Money: None detected 💬 Content Preview: Attached are two files that illustrate the following: As prices rose, supply increased and demand decreased. Now prices are beginning to fall in response these market responses. ---------------------------------------------------------------------------------------------------- 📧 Real Email #5 🆔 ID: email_000005 👤 From: kurt.lindahl@elpaso.com 👤 To: atsm@chewon.com, aarmstrong@sempratrading.com, neilaj@texaco.com, 📅 Date: Tue, 31 Jul 2001 08:28:00 -0700 (PDT) 📝 Subject: El Paso 👥 Persons: Origination El Paso, Tx 77252-2511, Kurt Lindahl Sr., Rob Bryngelson 🏢 Organizations: the ElPaso Corporation, El Paso, Global LNG Division, El Paso Merchant Energy, Business Development 📍 Locations: Houston 💰 Money: None detected 💬 Content Preview: Dear Friends and Colleagues, This note is to inform you that I have joined El Paso Merchant Energy in their Global LNG Division reporting to Rob Bryngelson, Managing Director, Business Development. Pl... 
---------------------------------------------------------------------------------------------------- 📈 Real Data Processing Statistics: • Total real emails processed: 453905 • Average content length: 1474 characters • Average entities per email: 8.1 • Emails with persons mentioned: 383312 • Emails with organizations mentioned: 363549 • Emails with money amounts: 63303 🔍 Most interesting real emails (by entity count): 📧 High-entity email from tradersummary@syncrasy.com 📝 Subject: Syncrasy Daily Trader Summary for Wed, Jan 16, 2002 👥 Persons: Data, NC ERCOT(SP, Max, Aquila, Andy Weingarten 🏢 Organizations: Trader Summary, ERCOT(SP, SPP(= SP, Average-Daily Maximum Temperature', MAPP(HP 💰 Money: 37 -1 MAIN(CTR, 50,000, 43 -1 MAIN(CTR, 36 -1 MAIN(CTR, 40 -1 WSCC(RK 📧 High-entity email from lucky@icelandair.is 📝 Subject: Iceland Food Festival 👥 Persons: Hotel Klopp, Rich, Mar 1 - National Beer Day, David Rosengarten, Subject 🏢 Organizations: Reykjav?k/K?pavogur, Party, Party Gourmet Dinner, BWI, SCENIC SIGHTSEEING Blue Lagoon 💰 Money: 65, 66, 69, 50, 55 📧 High-entity email from truorange@aol.com 📝 Subject: True Orange, November 27, Part 2 👥 Persons: Sooners, Jody Conradt, ESPN, Harris, Northwestern 🏢 Organizations: Oregon State, K-State, Texas A&M, SEC, Big East 💰 Money: $1.1 million, $1.9 million, $2.5 million, $1.2 million, 750,000
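The sample output shows that the NER model mislabels some spans: header words such as `Bcc` and `Subject` appear as persons (the Email #2 preview shows that `Cc:`/`Bcc:` header lines leaked into the body), strings with digits such as `Meter 5892 - UA4 1996` and `Tx 77252-2511` are tagged PERSON, and table fragments such as `37 -1 MAIN(CTR` are tagged MONEY. Because these values become metadata that you will filter on, a light post-filter can help. The sketch below is a hypothetical heuristic, not part of the original pipeline; `HEADER_WORDS`, `MONEY_RE`, and both function names are assumptions you would tune on your own data.

```python
import re

# Hypothetical heuristics; tune the word list and the pattern for your own data.
HEADER_WORDS = {"bcc", "cc", "subject", "from", "to", "sent", "re", "fw"}

# Accepts amounts such as "$250", "750,000", "$1.1 million"; rejects "37 -1 MAIN(CTR".
MONEY_RE = re.compile(
    r"^\$?\d+(?:,\d{3})*(?:\.\d+)?(?:\s*(?:thousand|million|billion))?$",
    re.IGNORECASE,
)


def clean_persons(persons: list[str]) -> list[str]:
    """Drop email header words and any 'person' that contains a digit."""
    return [
        p for p in persons
        if p.lower() not in HEADER_WORDS and not any(ch.isdigit() for ch in p)
    ]


def clean_money(amounts: list[str]) -> list[str]:
    """Keep only strings that look like a plain monetary amount."""
    return [a for a in amounts if MONEY_RE.match(a.strip())]


print(clean_persons(["Daren J Farmer/HOU", "Susan", "Meter 5892 - UA4 1996", "Bcc"]))
print(clean_money(["37 -1 MAIN(CTR", "50,000", "$1.1 million", "750,000"]))
```

If you adopt something like this, applying it inside `extract_entities_with_ner` before deduplication and the five-per-type cap keeps noisy spans from crowding out real ones. Bare numbers such as `65` still pass the money pattern, since they cannot be told apart from amounts without more context.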


📊 Sample of Enron emails processed: ======================================================================================================================== 📧 Real Email #1 🆔 ID: email_000000 👤 From: daren.farmer@enron.com 👤 To: susan.trevino@enron.com 📅 Date: Fri, 10 Dec 1999 08:33:00 -0800 (PST) 📝 Subject: Re: Meter 5892 - UA4 1996 and 1997 Logistics Issues 👥 Persons: Daren J Farmer/HOU, Susan, Meter 5892 - UA4 1996, Mary M Smith/HOU, Susan D Trevino 🏢 Organizations: Volume Management 📍 Locations: UA4 💰 Money: None detected 💬 Content Preview: Susan, I need you to do the research on this meter. You will need to review the various scheduling systems to see how this was handled prior to 2/96. You can also check with Volume Management to see i... ---------------------------------------------------------------------------------------------------- 📧 Real Email #2 🆔 ID: email_000001 👤 From: eric.bass@enron.com 👤 To: jason.bass2@compaq.com, phillip.love@enron.com, bryan.hull@enron.com, 📅 Date: Fri, 18 Aug 2000 05:03:00 -0700 (PDT) 📝 Subject: DRAFT 👥 Persons: Bcc 🏢 Organizations: None detected 📍 Locations: Rice Village 💰 Money: None detected 💬 Content Preview: Cc: timothy.blanchard@enron.com Bcc: timothy.blanchard@enron.com Remember, the draft is this Sunday at 11:45 am at BW-3 in Rice Village. Please try to be there on time so we can start promptly. -Eric ---------------------------------------------------------------------------------------------------- 📧 Real Email #3 🆔 ID: email_000003 👤 From: larry.campbell@enron.com 👤 To: pdrumm@csc.com 📅 Date: Mon, 31 Jul 2000 09:53:00 -0700 (PDT) 📝 Subject: More July CED-PGE 👥 Persons: Susan Fick, Patty 🏢 Organizations: None detected 📍 Locations: None detected 💰 Money: None detected 💬 Content Preview: Patty Could you please forward this to Susan Fick. I don't have her e-mail. 
LC ---------------------------------------------------------------------------------------------------- 📧 Real Email #4 🆔 ID: email_000004 👤 From: phillip.allen@enron.com 👤 To: christi.nicolay@enron.com, james.steffes@enron.com, jeff.dasovich@enron.com, 📅 Date: Wed, 13 Dec 2000 07:04:00 -0800 (PST) 📝 Subject: Body: 👥 Persons: None detected 🏢 Organizations: None detected 📍 Locations: None detected 💰 Money: None detected 💬 Content Preview: Attached are two files that illustrate the following: As prices rose, supply increased and demand decreased. Now prices are beginning to fall in response these market responses. ---------------------------------------------------------------------------------------------------- 📧 Real Email #5 🆔 ID: email_000005 👤 From: kurt.lindahl@elpaso.com 👤 To: atsm@chewon.com, aarmstrong@sempratrading.com, neilaj@texaco.com, 📅 Date: Tue, 31 Jul 2001 08:28:00 -0700 (PDT) 📝 Subject: El Paso 👥 Persons: Origination El Paso, Tx 77252-2511, Kurt Lindahl Sr., Rob Bryngelson 🏢 Organizations: the ElPaso Corporation, El Paso, Global LNG Division, El Paso Merchant Energy, Business Development 📍 Locations: Houston 💰 Money: None detected 💬 Content Preview: Dear Friends and Colleagues, This note is to inform you that I have joined El Paso Merchant Energy in their Global LNG Division reporting to Rob Bryngelson, Managing Director, Business Development. Pl... 
---------------------------------------------------------------------------------------------------- 📈 Real Data Processing Statistics: • Total real emails processed: 453905 • Average content length: 1474 characters • Average entities per email: 8.1 • Emails with persons mentioned: 383312 • Emails with organizations mentioned: 363549 • Emails with money amounts: 63303 🔍 Most interesting real emails (by entity count): 📧 High-entity email from tradersummary@syncrasy.com 📝 Subject: Syncrasy Daily Trader Summary for Wed, Jan 16, 2002 👥 Persons: Data, NC ERCOT(SP, Max, Aquila, Andy Weingarten 🏢 Organizations: Trader Summary, ERCOT(SP, SPP(= SP, Average-Daily Maximum Temperature', MAPP(HP 💰 Money: 37 -1 MAIN(CTR, 50,000, 43 -1 MAIN(CTR, 36 -1 MAIN(CTR, 40 -1 WSCC(RK 📧 High-entity email from lucky@icelandair.is 📝 Subject: Iceland Food Festival 👥 Persons: Hotel Klopp, Rich, Mar 1 - National Beer Day, David Rosengarten, Subject 🏢 Organizations: Reykjav?k/K?pavogur, Party, Party Gourmet Dinner, BWI, SCENIC SIGHTSEEING Blue Lagoon 💰 Money: 65, 66, 69, 50, 55 📧 High-entity email from truorange@aol.com 📝 Subject: True Orange, November 27, Part 2 👥 Persons: Sooners, Jody Conradt, ESPN, Harris, Northwestern 🏢 Organizations: Oregon State, K-State, Texas A&M, SEC, Big East 💰 Money: $1.1 million, $1.9 million, $2.5 million, $1.2 million, 750,000

The data looks good, so let's save it to a CSV file, which we will then load into our knowledge base:

```python
# Save the processed real data
df_processed.to_csv('enron_emails_processed_real.csv', index=False)
print("\n✅ Real Enron emails saved to 'enron_emails_processed_real.csv'")
```

✅ Real Enron emails saved to 'enron_emails_processed_real.csv'
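One detail worth checking before loading the file: the extracted entity fields (persons, organizations, locations, money amounts) may be stored as Python lists in `df_processed`. That is an assumption here, since the frame is built earlier in the tutorial. If they are, `to_csv` writes each list cell via its `str()` representation, so reading the CSV back yields plain strings. That is fine for `LIKE`-style matching, but if you need real lists again, a JSON round trip is one option. A minimal sketch on a hypothetical one-row frame:

```python
import io
import json

import pandas as pd

# Hypothetical miniature of df_processed (assumes entity columns hold Python lists)
df = pd.DataFrame({
    "id": ["email_000003"],
    "persons": [["Susan Fick", "Patty"]],
})

# Default behaviour: the list is written via str() and read back as a plain string
naive_buf = io.StringIO()
df.to_csv(naive_buf, index=False)
naive_buf.seek(0)
naive_back = pd.read_csv(naive_buf)
print(type(naive_back.loc[0, "persons"]).__name__)  # str

# Alternative: serialize lists as JSON before writing, parse them after reading
json_buf = io.StringIO()
df.assign(persons=df["persons"].map(json.dumps)).to_csv(json_buf, index=False)
json_buf.seek(0)
df_back = pd.read_csv(json_buf)
df_back["persons"] = df_back["persons"].map(json.loads)
print(df_back.loc[0, "persons"])  # ['Susan Fick', 'Patty']
```

Whether to keep the default string form or the JSON form depends on how you plan to query the metadata; the sketch only shows what each choice produces.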

2.3 Connecting to the Vector Store

When you create a MindsDB Knowledge Base, MindsDB splits the text into smaller pieces (chunks) and uses an external text embedding model to convert each chunk into an embedding vector. Embedding vectors are numerical arrays with a useful property: if two texts are semantically similar, their embedding vectors lie close to each other in the vector space. This lets us compare two texts by meaning: we apply a similarity measure, such as cosine similarity, to their vectors and check how close they are.
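As a toy illustration of that comparison, here is cosine similarity in plain Python. The three-dimensional vectors are made up for the example (real embedding models produce vectors with hundreds or thousands of dimensions), and in practice the vector store computes the similarity for you; the chunk labels are hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional "embeddings" for three hypothetical email chunks
meter_question = [0.9, 0.1, 0.2]  # e.g. a chunk about gas meter volumes
volume_report = [0.8, 0.2, 0.3]   # e.g. a chunk about pipeline volumes
social_event = [0.1, 0.9, 0.0]    # e.g. a chunk about an office social event

print(round(cosine_similarity(meter_question, volume_report), 3))  # 0.983
print(round(cosine_similarity(meter_question, social_event), 3))   # 0.214
```

A value close to 1 means the vectors point in nearly the same direction, so the two volume-related chunks score far higher than the unrelated one.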

These embedding vectors need to be stored somewhere, typically in a vector database, and several open-source ones exist. MindsDB uses ChromaDB by default. However, ChromaDB doesn't support the LIKE operator, a standard SQL pattern-matching operator used in the WHERE clause of SELECT queries. Since we will use LIKE in this tutorial, we will use a different open-source vector store instead: PGVector, the vector-similarity extension for PostgreSQL.
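To make concrete what a LIKE filter matches, here is a pure-Python sketch of its rules: `%` matches any sequence of characters (including none), `_` matches exactly one character, and the comparison is case-sensitive, as with PostgreSQL's LIKE (ILIKE is its case-insensitive variant). The helper names are hypothetical, ESCAPE clauses are ignored, and this is not how MindsDB or PGVector evaluate LIKE internally.

```python
import re


def like_to_regex(pattern: str) -> re.Pattern:
    """Translate a SQL LIKE pattern into a compiled regular expression.

    '%' matches any sequence of characters (including an empty one), '_' matches
    exactly one character, and every other character matches itself literally.
    ESCAPE clauses are not handled in this sketch.
    """
    parts = []
    for ch in pattern:
        if ch == "%":
            parts.append(".*")
        elif ch == "_":
            parts.append(".")
        else:
            parts.append(re.escape(ch))
    # DOTALL lets '%' and '_' also match newline characters
    return re.compile("".join(parts), re.DOTALL)


def sql_like(value: str, pattern: str) -> bool:
    """Case-sensitive LIKE: the whole value must match the pattern."""
    return like_to_regex(pattern).fullmatch(value) is not None


senders = ["daren.farmer@enron.com", "kurt.lindahl@elpaso.com", "eric.bass@enron.com"]
print([s for s in senders if sql_like(s, "%@enron.com")])
# ['daren.farmer@enron.com', 'eric.bass@enron.com']
```

This kind of hard, pattern-based filter on metadata is what we will combine with the soft, similarity-based search.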

For this tutorial, we provisioned a PGVector instance on AWS. You can install it locally too. Here's how you can do it.

Let's create the database connection enron_kb_pgvector, which will store the knowledge base's embedding vectors:

```python
# Drop an existing pgvector database if it exists
try:
    print("🗑️ Dropping existing pgvector database...")
    server.query("DROP DATABASE IF EXISTS enron_kb_pgvector;").fetch()
    print("✅ Dropped existing database")
except Exception as e:
    print(f"⚠️ Drop error: {e}")

# Create a fresh pgvector database connection
try:
    server.query("""
        CREATE DATABASE enron_kb_pgvector
        WITH ENGINE = 'pgvector',
        PARAMETERS = {
            "host": "c3hsmn51hjafhh.cluster-czrs8kj4isg7.us-east-1.rds.amazonaws.com",
            "port": 5432,
            "database": "df1f3i5s2jrksf",
            "user": "u36kd0g64092pk",
            "password": "pc08df7cb724a4ad6b1a8288c3666fa087f1a89c1ba5d1a555b40a8ba863672e4"
        };
    """).fetch()
    print("✅ Created pgvector database connection 'enron_kb_pgvector'")
except Exception as e:
    print(f"❌ Database connection error: {e}")
    raise
```

```
🗑️ Dropping existing pgvector database...
✅ Dropped existing database
✅ Created pgvector database connection 'enron_kb_pgvector'
```
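The CREATE DATABASE statement in this step embeds the connection credentials, including the password, directly in the notebook. If you adapt it for your own PGVector instance, one option is to read them from environment variables instead. A minimal sketch: the `PGVECTOR_*` variable names and the `build_pgvector_create_sql` helper are hypothetical, and it assumes MindsDB accepts the JSON that `json.dumps` produces for PARAMETERS (the literal used in this step is already JSON-shaped).

```python
import json
import os


def build_pgvector_create_sql(name: str) -> str:
    """Build a CREATE DATABASE statement with credentials taken from the environment.

    The PGVECTOR_* variable names are hypothetical; use whatever your setup defines.
    """
    params = {
        "host": os.environ["PGVECTOR_HOST"],
        "port": int(os.environ.get("PGVECTOR_PORT", "5432")),
        "database": os.environ["PGVECTOR_DATABASE"],
        "user": os.environ["PGVECTOR_USER"],
        "password": os.environ["PGVECTOR_PASSWORD"],
    }
    # json.dumps emits double-quoted keys and strings and escapes any quotes in values
    return (
        f"CREATE DATABASE {name}\n"
        "WITH ENGINE = 'pgvector',\n"
        f"PARAMETERS = {json.dumps(params, indent=4)};"
    )
```

You would then run the generated statement the same way as before, e.g. `server.query(build_pgvector_create_sql("enron_kb_pgvector")).fetch()`. A missing variable raises `KeyError` before anything is sent to MindsDB.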

2.4 Uploading the Dataset to MindsDB

Now let's connect to our local MindsDB instance and upload the dataset:

Remember that in this tutorial, we assume you have a free, open-source MindsDB instance running in your local environment. Please follow these steps to set it up.