Subscribe to our Blog

More Posts

Feb 2, 2026

How Web Apps Can Gain a Competitive Edge with Supabase and MindsDB

Jan 27, 2026

Building a Semantic Search Knowledge Base with MindsDB

Jan 27, 2026

MindsDB Product Updates - January 2026

Jan 22, 2026

W.I.S.H – Whatever I Say Happens – Programming

Jan 15, 2026

Building AI-Powered Data Analytics with MindsDB: From Natural Language to Charts

Building AI-Powered Data Analytics with MindsDB: From Natural Language to Charts

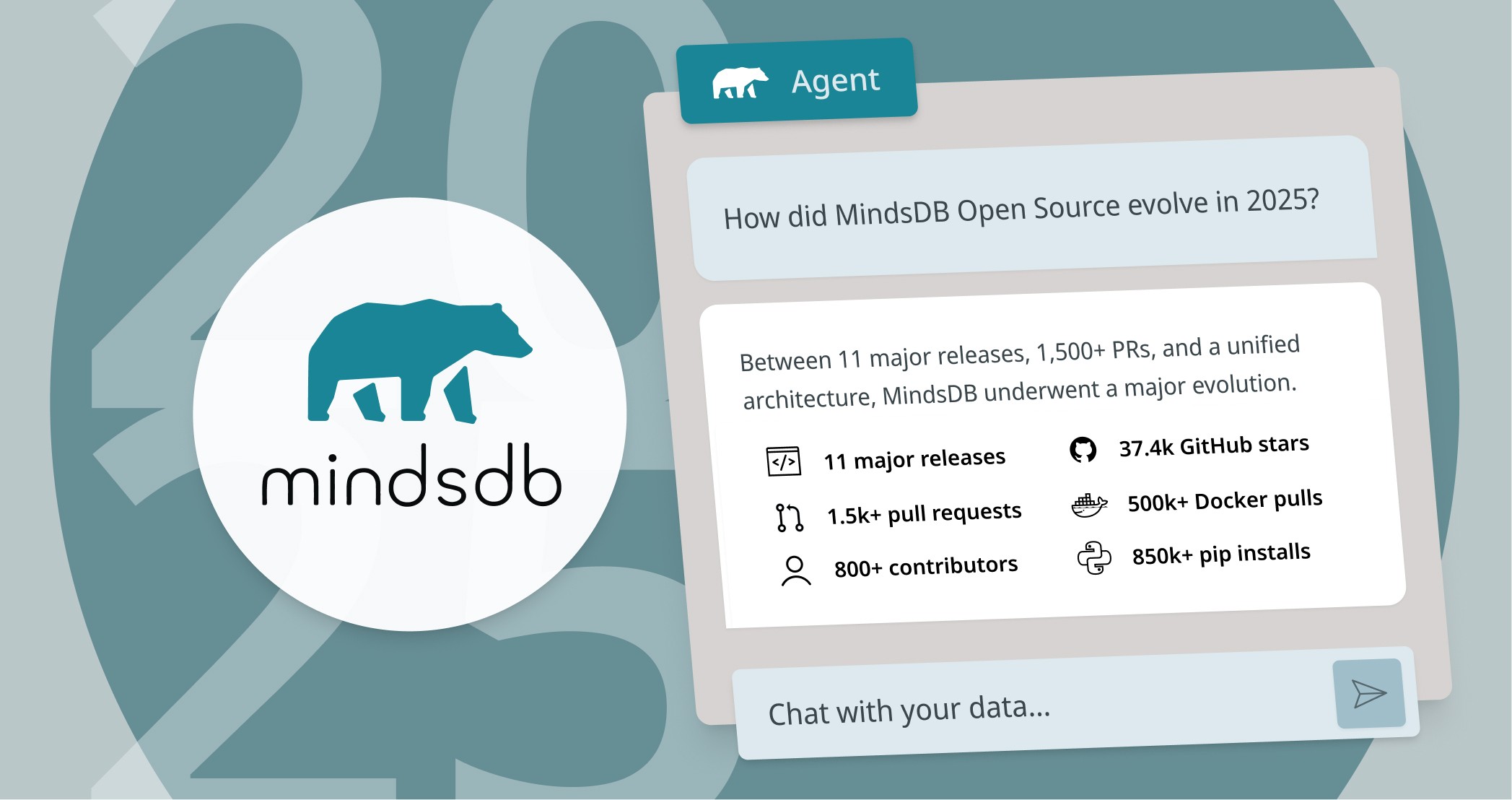

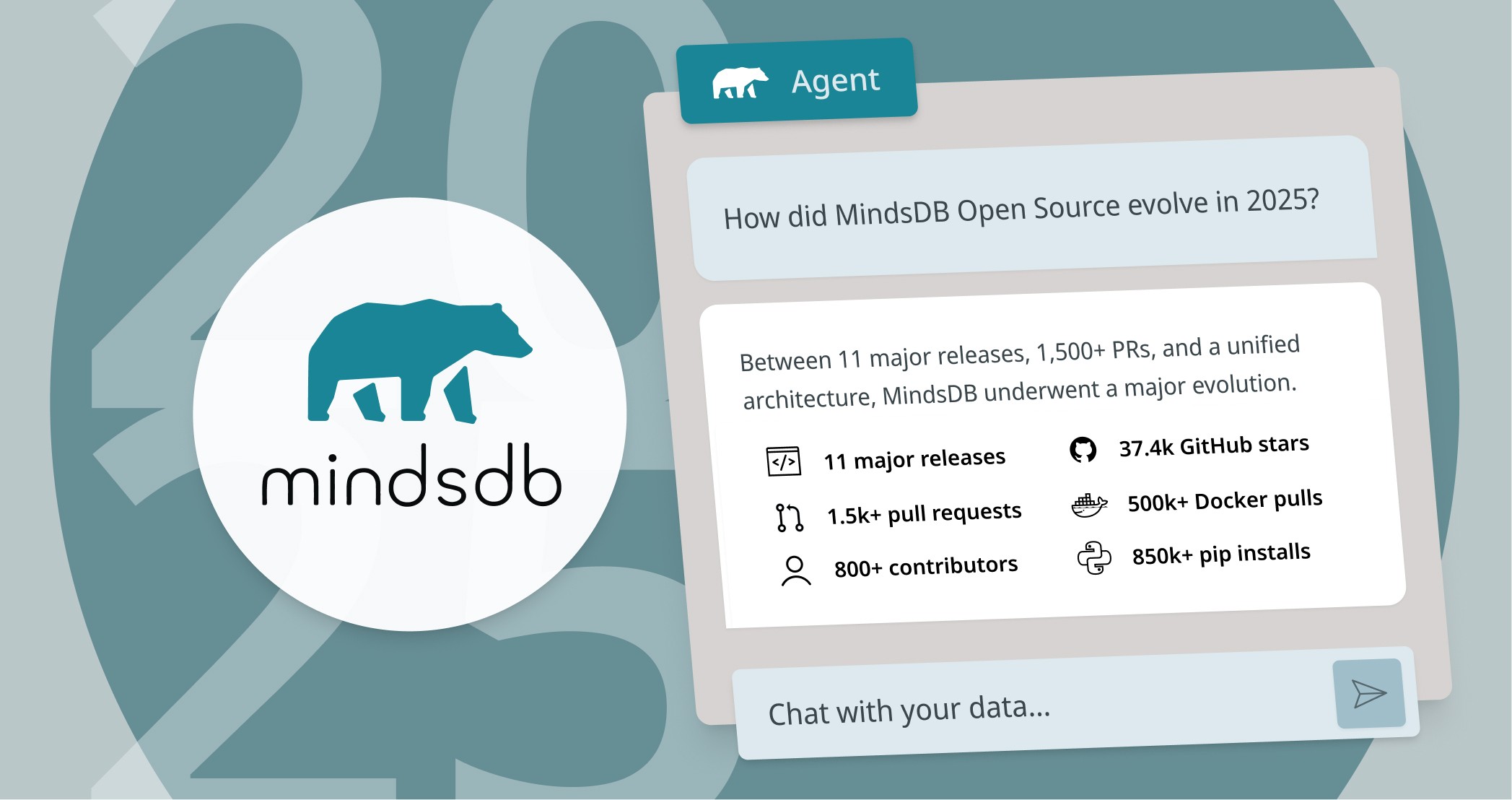

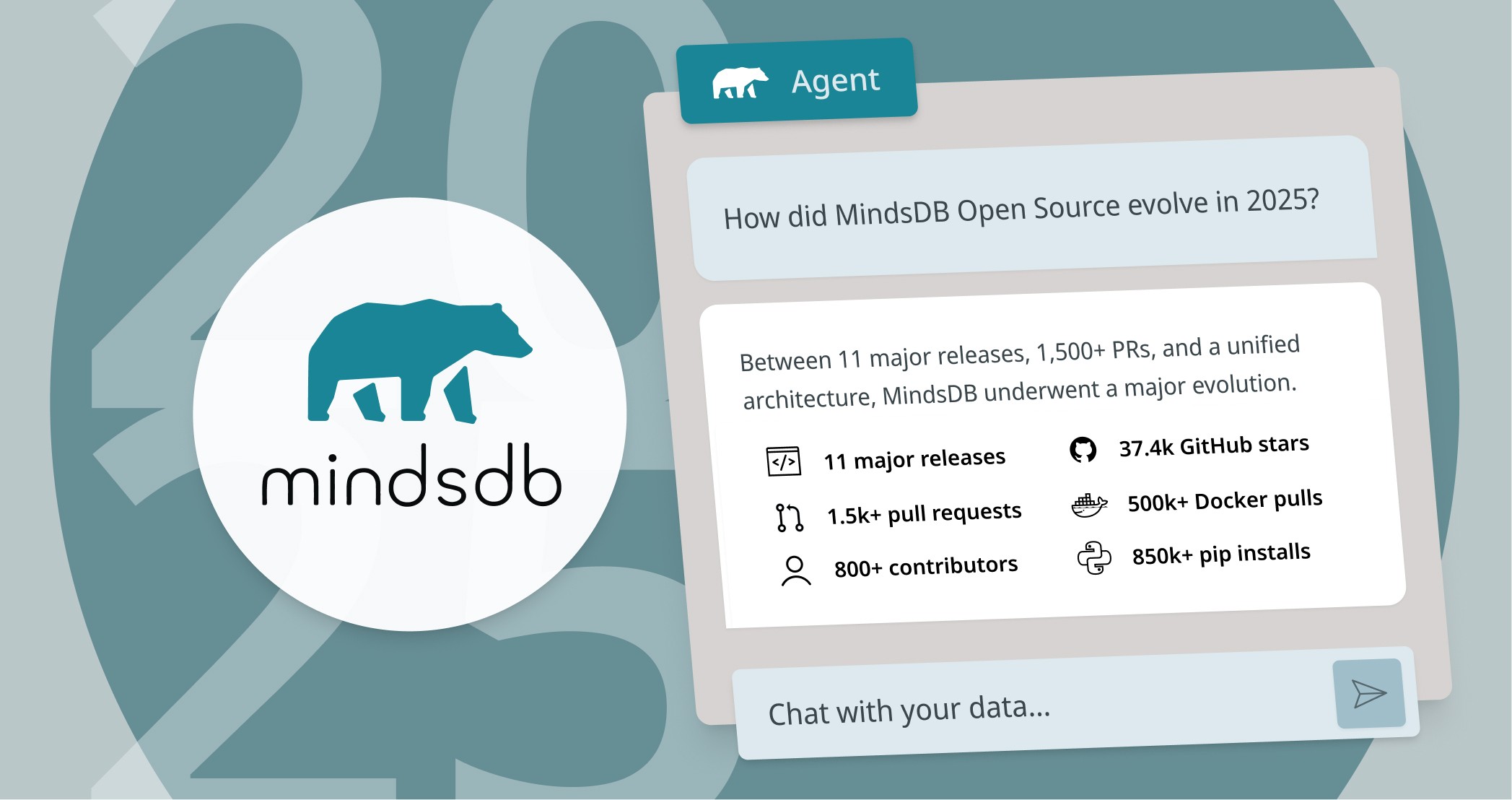

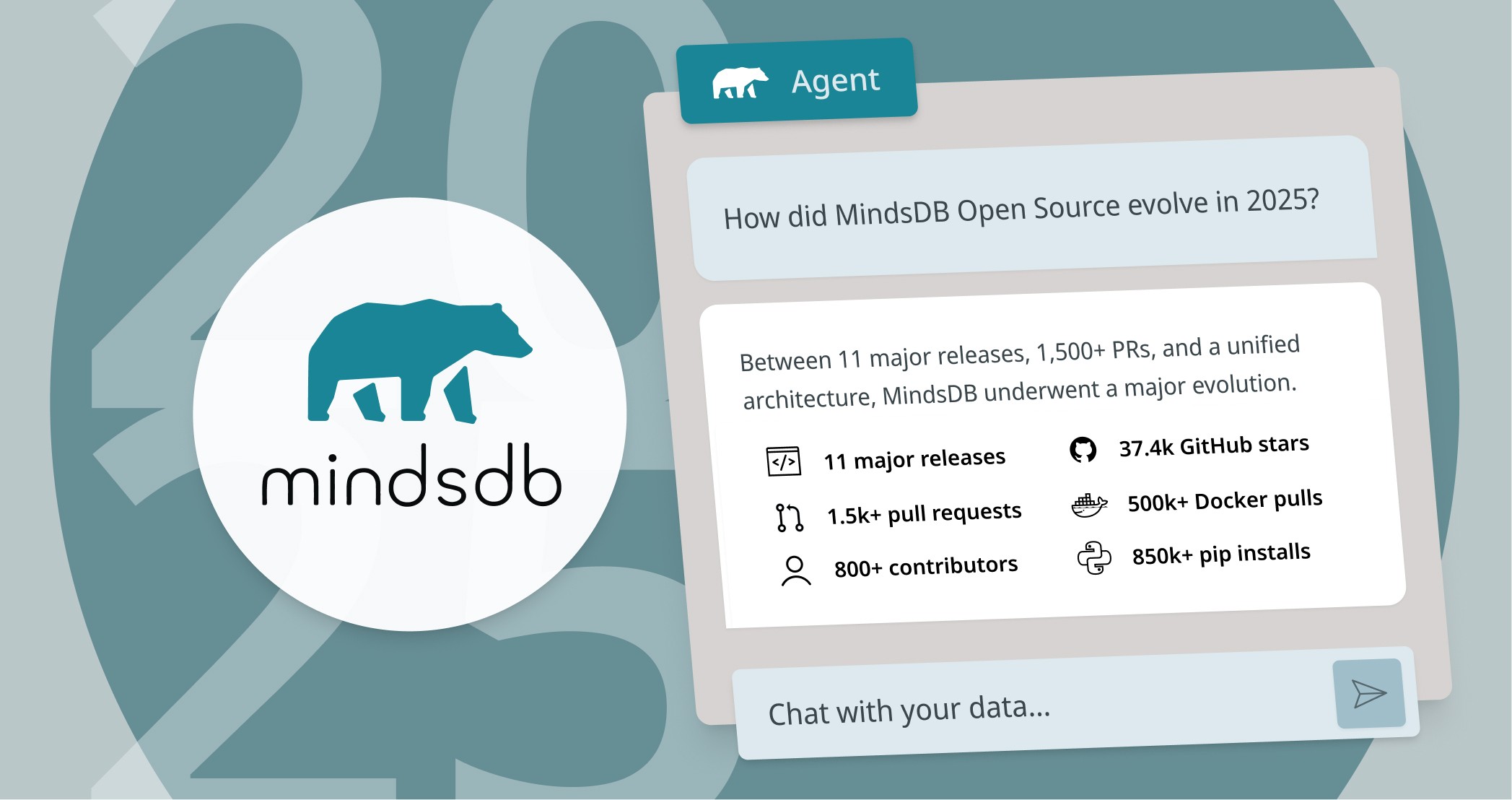

Dec 17, 2025

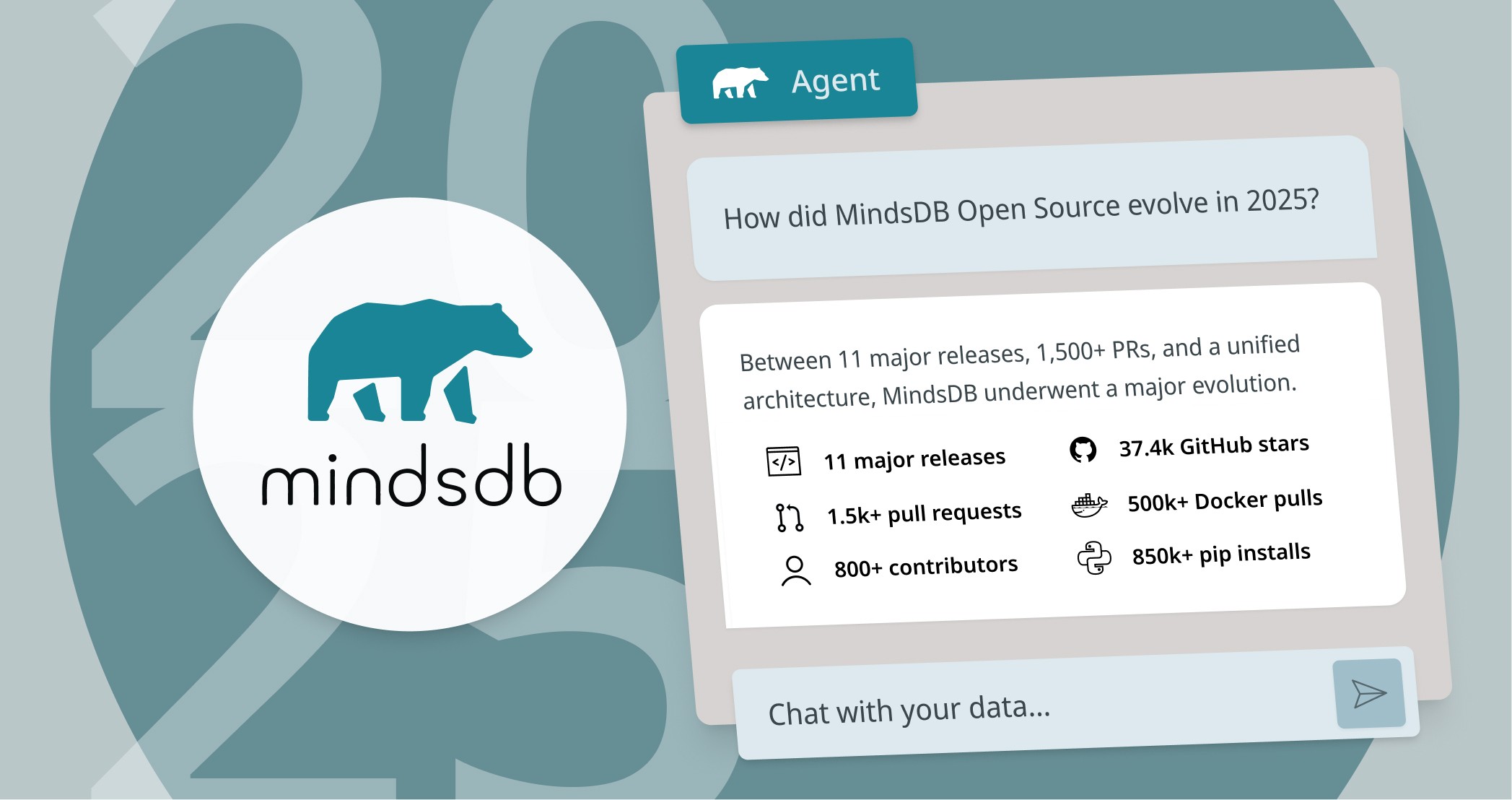

MindsDB in 2025: From SQL to the Universal AI Data Hub
