More Posts

Dec 17, 2025

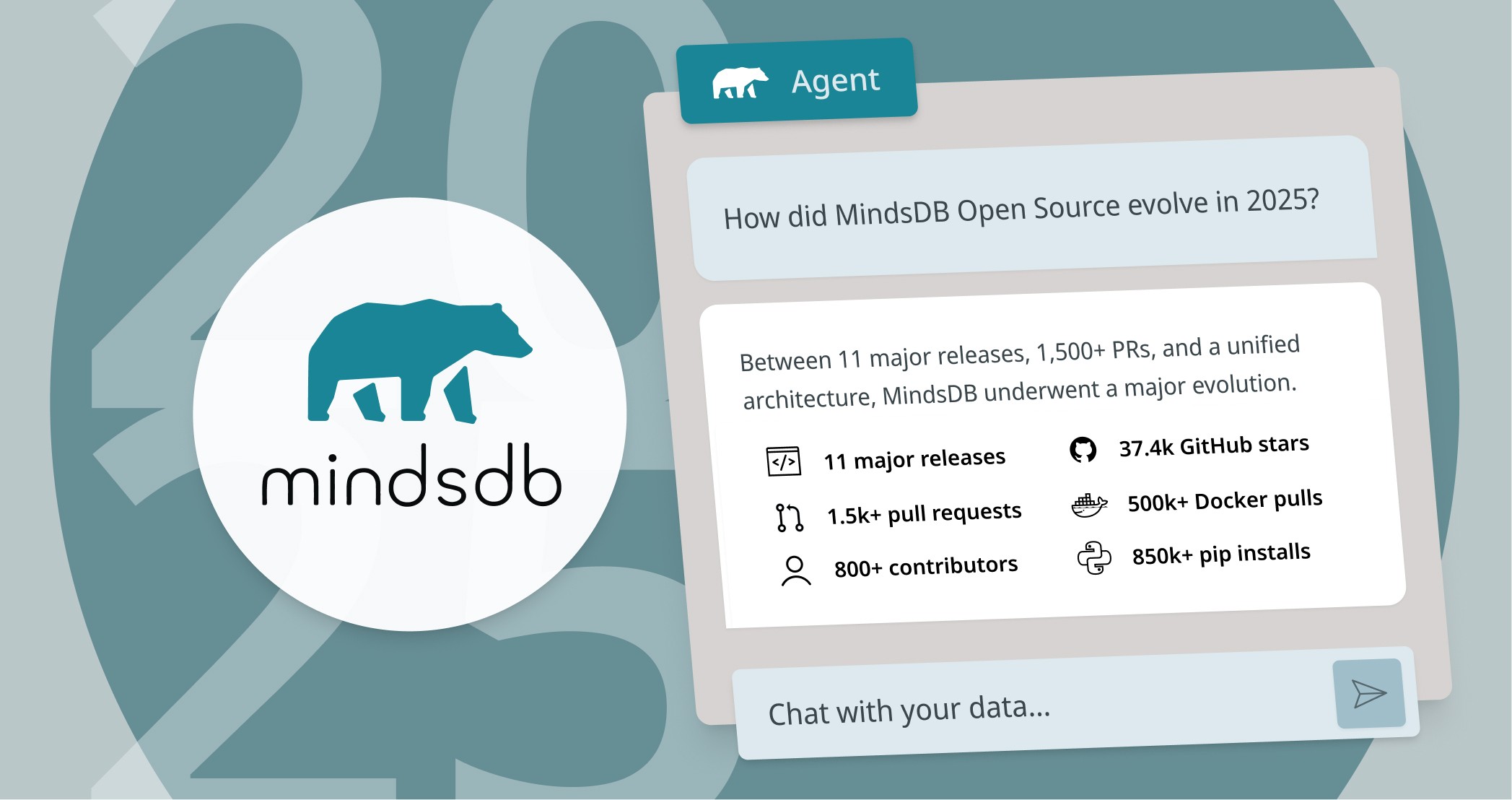

MindsDB Product Updates - December 2025

Dec 10, 2025

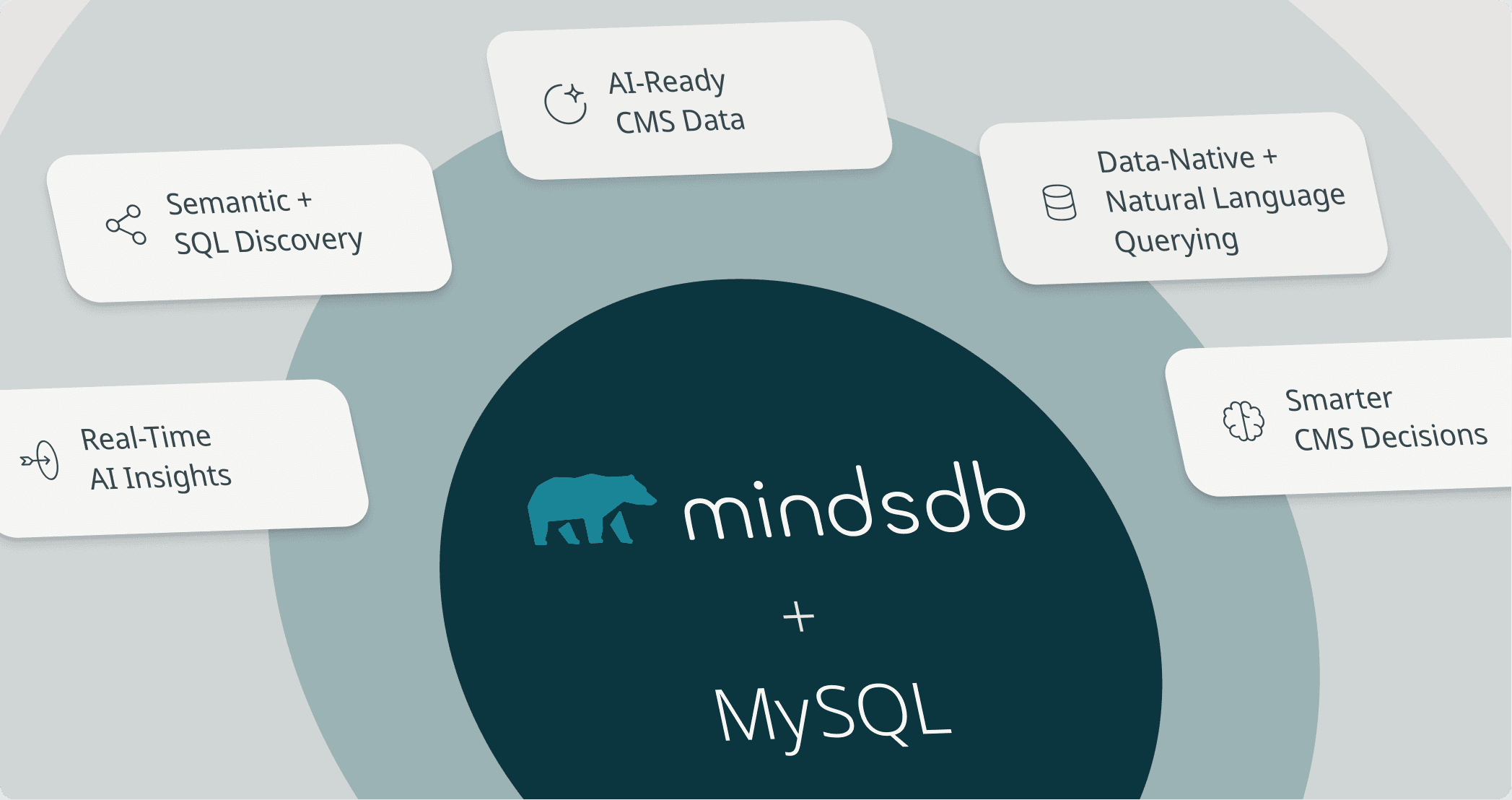

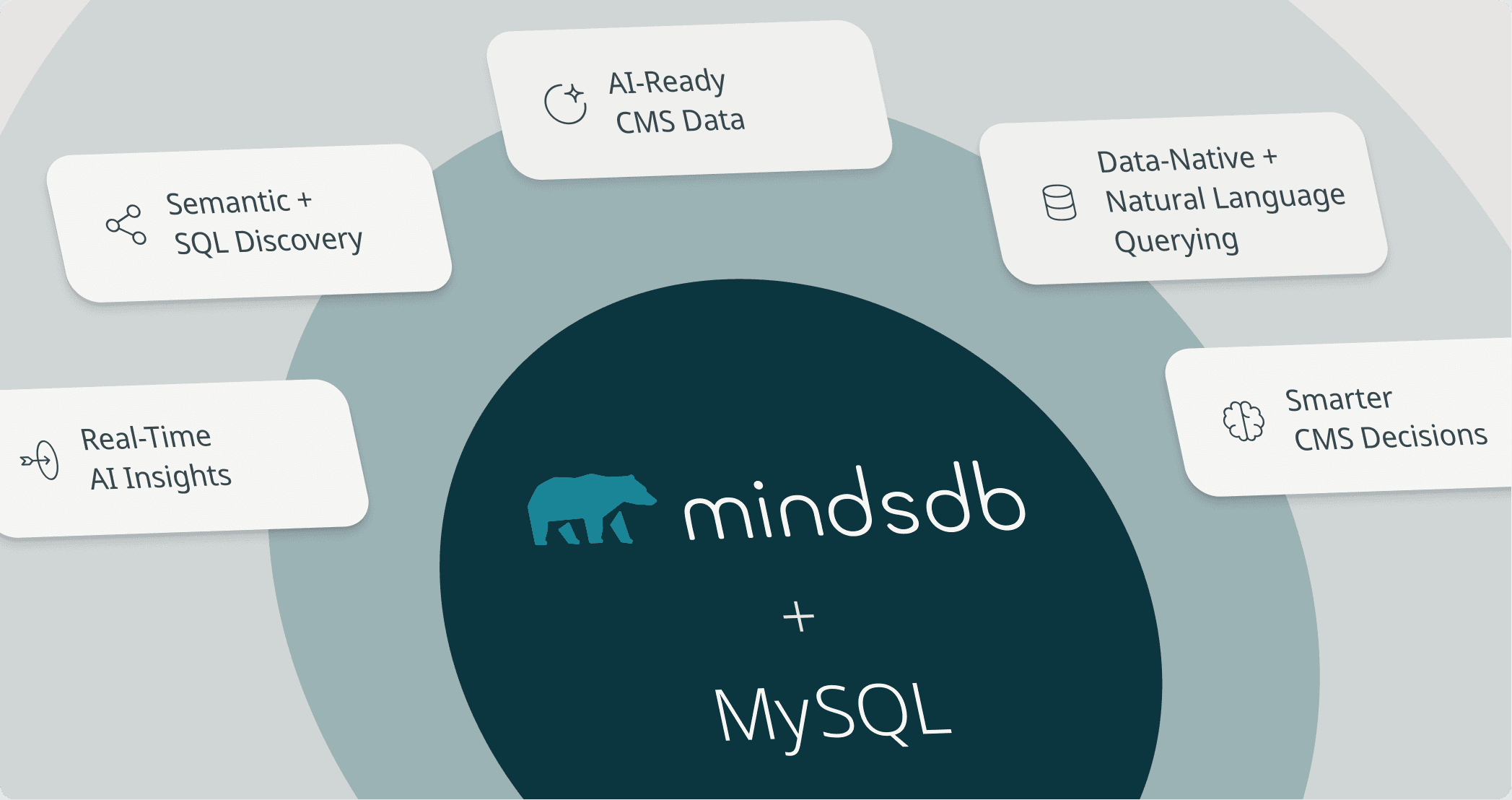

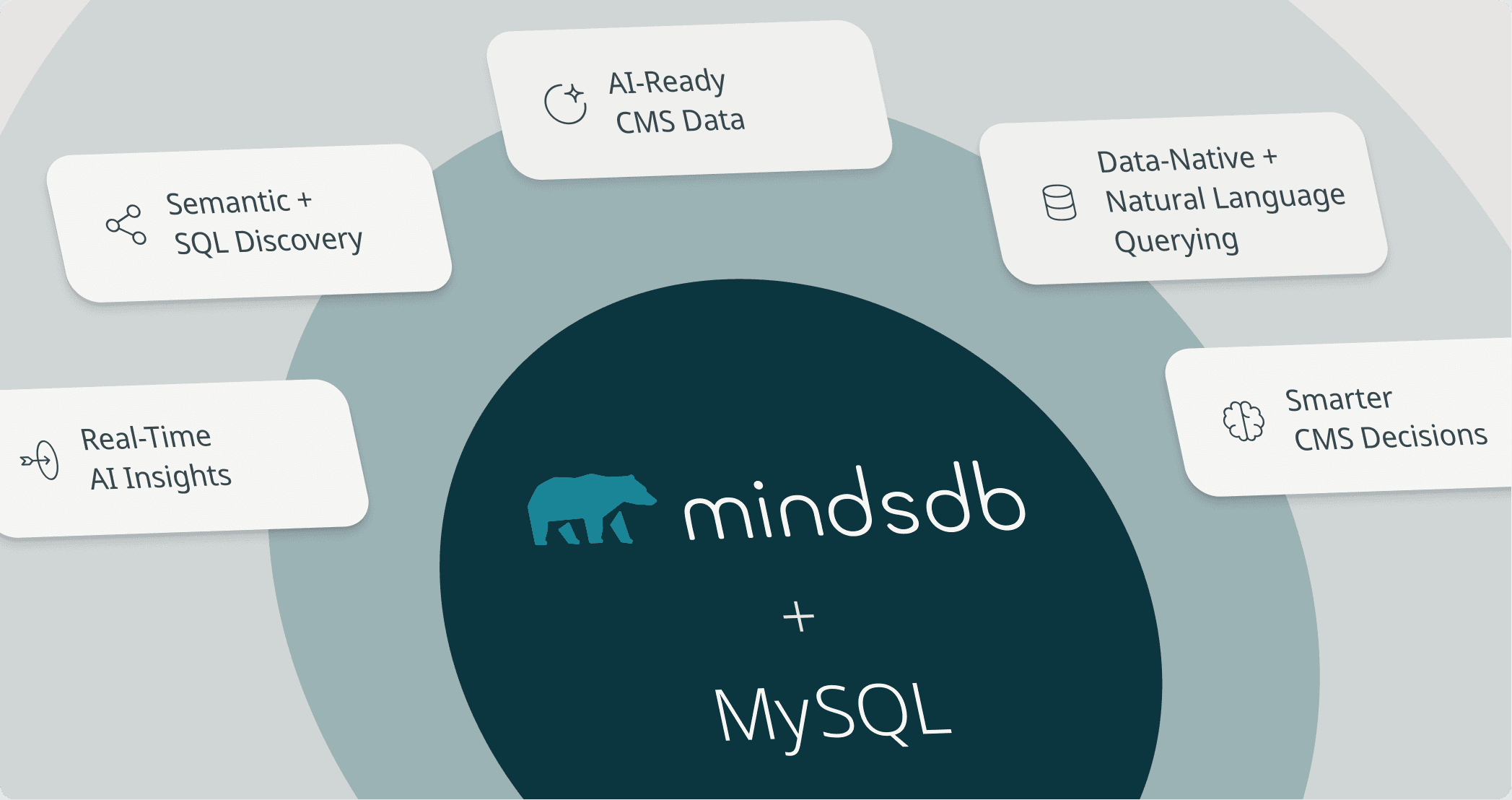

MySQL & MindsDB Unlock Intelligent Content Discovery for Web CMS with Knowledge Bases and Cursor

Dec 3, 2025

Area51 - Unifying Enterprise Knowledge Search with MindsDB

Dec 2, 2025

Building Janus: An AI Customer Support Helpdesk System Powered by MindsDB

Nov 26, 2025

Blend Hybrid Retrieval with Structured Data using MindsDB Knowledge Bases

Nov 26, 2025

MindsDB Product Updates - November 2025